How to talk to Generative AI

Now that we have a high-level understanding of how large language models work, we can move to the application that has made them so famous—AI assistants like ChatGPT, Claude, and Gemini. In this section, we will focus on limitations of such models and strategies to overcome these limitations. Generally, this course is tool agnostic, and what you will learn here should be true for any LLM-based application. For completeness, though, it is worth providing a brief overview of the main options available to you.

Starting point

I think a good starting point is to talk to AI models just as you would talk to a human assistant. Use natural language and be specific in your requests: just as humans can’t read your mind, neither can AI models. While it’s important to be aware of potential mistakes, I think some people tend to overemphasise this concern. It’s worth remembering that no source is entirely free from errors: software has bugs, encyclopaedias contain inaccuracies, and making mistakes is fundamentally human.

I have to mention that some critics argue against anthropomorphising chatbots. They suggest we should be very careful about using terms like artificial “intelligence” (“there has been little discussion about how <...> the term artificial ‘intelligence’, has been used to legitimize powerful and false ideologies that serve to diminish human and worker rights”; The brain is a computer is a brain: neuroscience’s internal debate and the social significance of the Computational Metaphor, Baria & Cross, 2021), or saying that these systems “understand” (“these successes sometimes lead to hype in which these models are being described as ‘understanding’ language”; On Meaning, Form, and Understanding in the Age of Data, Bender & Koller, 2020) or “think”, emphasising that AI models differ profoundly from humans in how they reason and use language (Noam Chomsky: The False Promise of ChatGPT). Personally, I think that the dangers of anthropomorphising are overstated: humans anthropomorphise everything from natural phenomena to tools in their daily lives without assuming these things are conscious. And as we discussed earlier, the term “artificial intelligence” has existed for more than half a century; I don’t think it’s necessary to rename it now. However, I invite you to explore the provided references to form your own opinion on this topic.

That said, there are of course differences between AI models and humans. Not all of these differences are limitations—some can be advantageous. In this section, you will learn both how to be cautious when working with LLMs and how to benefit from their unique characteristics. Finally, we will discuss specific strategies that can help you interact with these models more effectively.

Benefit from differences from humans

As I have mentioned, not all differences between AI models and humans are negative. AI assistants have vast knowledge, and they are non-judgmental and infinitely patient. I have mentioned that it is useful to be specific when talking to AI; a human, however, might be annoyed if they think you’re being overly specific. Models won’t care, so it can be useful to provide as many details as possible for your task.

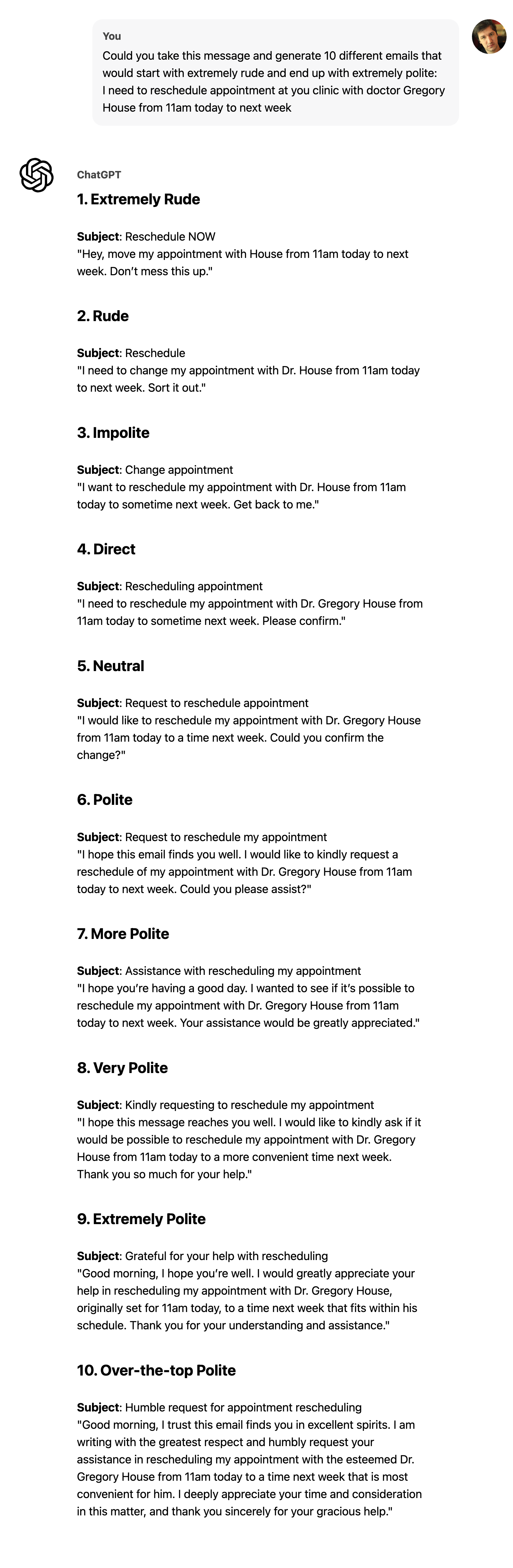

You could also drop some conventional constraints. Sometimes when I write, I switch to another language if I know the exact expression only in that language, and the model will automatically translate it, e.g. “Please, proofread: I’ve just наткнулся на статью where authors compared <...>” (the Russian phrase means “came across an article”). You can also ask for as many responses as you want, e.g. “<...> Please provide me with at least 20 options”. One trick that I use is to ask not just for many options, but for options generated along a specific spectrum. This way, I can select the most appropriate version or mix elements from different options to suit my style and purpose. For example, if I want help crafting an email about rescheduling an appointment, I might ask for ten answers with increasing levels of politeness. Check out the sample output below.
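If you send this kind of request often, the “many options along one spectrum” pattern can be captured as a small reusable template. The following is a minimal sketch in Python, not a prescribed method: the function name `spectrum_prompt` and the exact wording of the request are assumptions made for illustration. It only builds the request text; it does not call any AI service and does not produce the model’s answers.

```python
def spectrum_prompt(task: str, dimension: str, n_options: int = 10) -> str:
    """Build a request for several versions ordered along one dimension.

    Hypothetical helper for illustration only: it returns plain text
    that you can paste into (or send to) any AI assistant.
    """
    if n_options < 2:
        # A spectrum needs at least a low end and a high end.
        raise ValueError("a spectrum needs at least two options")
    return (
        f"{task}\n"
        f"Please provide {n_options} numbered versions, ordered from the "
        f"lowest to the highest level of {dimension}."
    )


# Example from the text: a rescheduling email with increasing politeness.
prompt = spectrum_prompt(
    task="Help me write an email about rescheduling an appointment.",
    dimension="politeness",
)
print(prompt)
```

Changing `dimension` (for example to “formality” or “brevity”) reuses the same pattern for a different spectrum.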

My overall recommendation is to remember that you don’t have to stick to conventional constraints and shouldn’t be afraid to experiment with different types of requests. Let’s now move to the next section, where we will learn about the limitations of LLMs.

Be aware of differences from humans

Large language models often perform as remarkably smart and knowledgeable human-like assistants. However, you should be aware that they can unexpectedly fail at surprisingly simple tasks. In the next video, I discuss the main types of tasks where Generative AI tends to fail. Feel free to watch it at 1.25x or higher speed if you prefer.

Prompt engineering

Some of the weaknesses discussed above can be mitigated with what is called “prompt engineering”. In the next video, I discuss common strategies to improve the performance of LLMs by providing them with better instructions, and explain why you probably shouldn’t worry too much about not being an expert in prompt engineering. Feel free to watch the video at 1.25x or higher speed if you prefer.